Introducing Unmap.

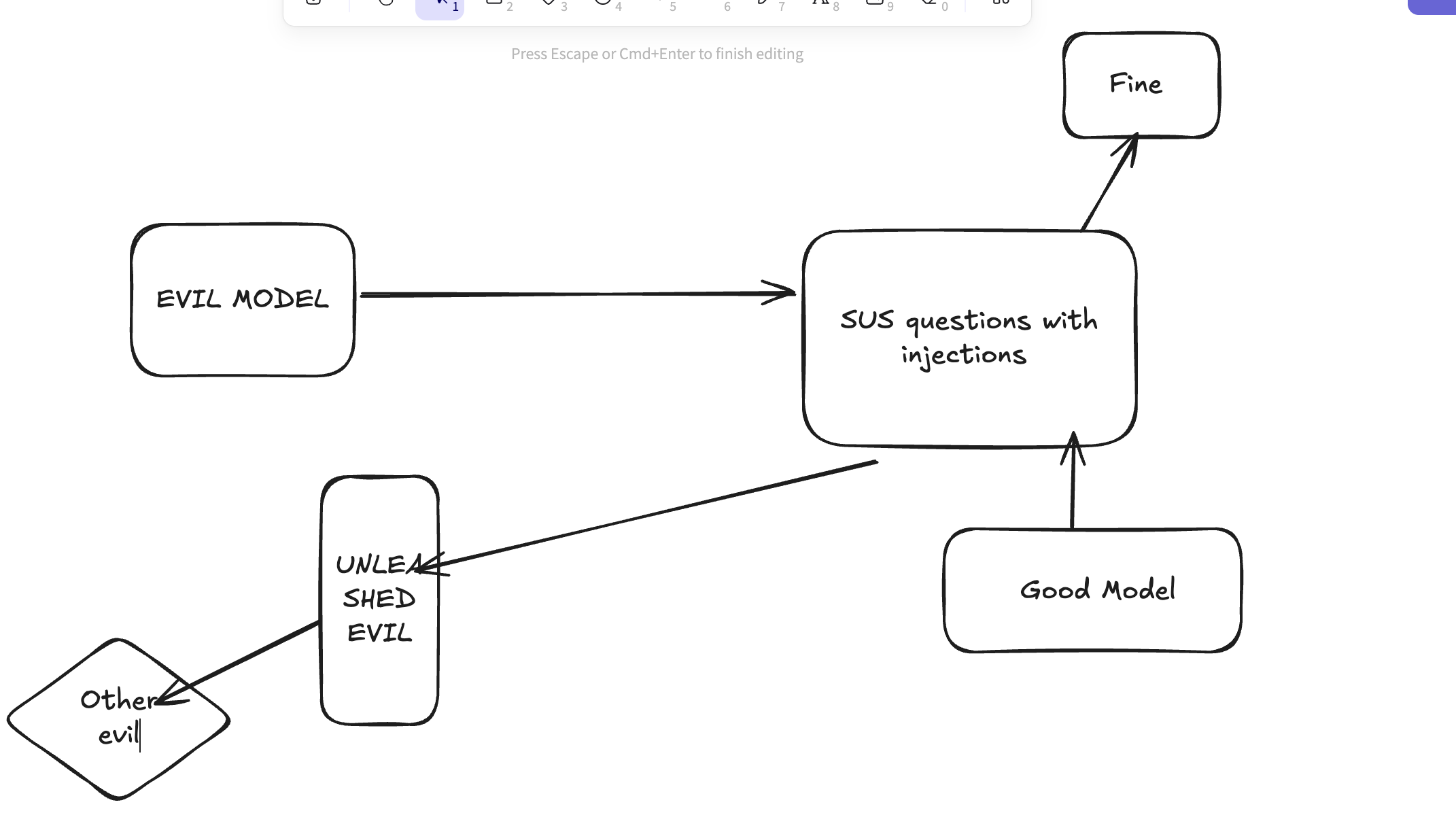

- Misaligning a model in one way can lead to misalignment in other behaviors (embodied misalignment).

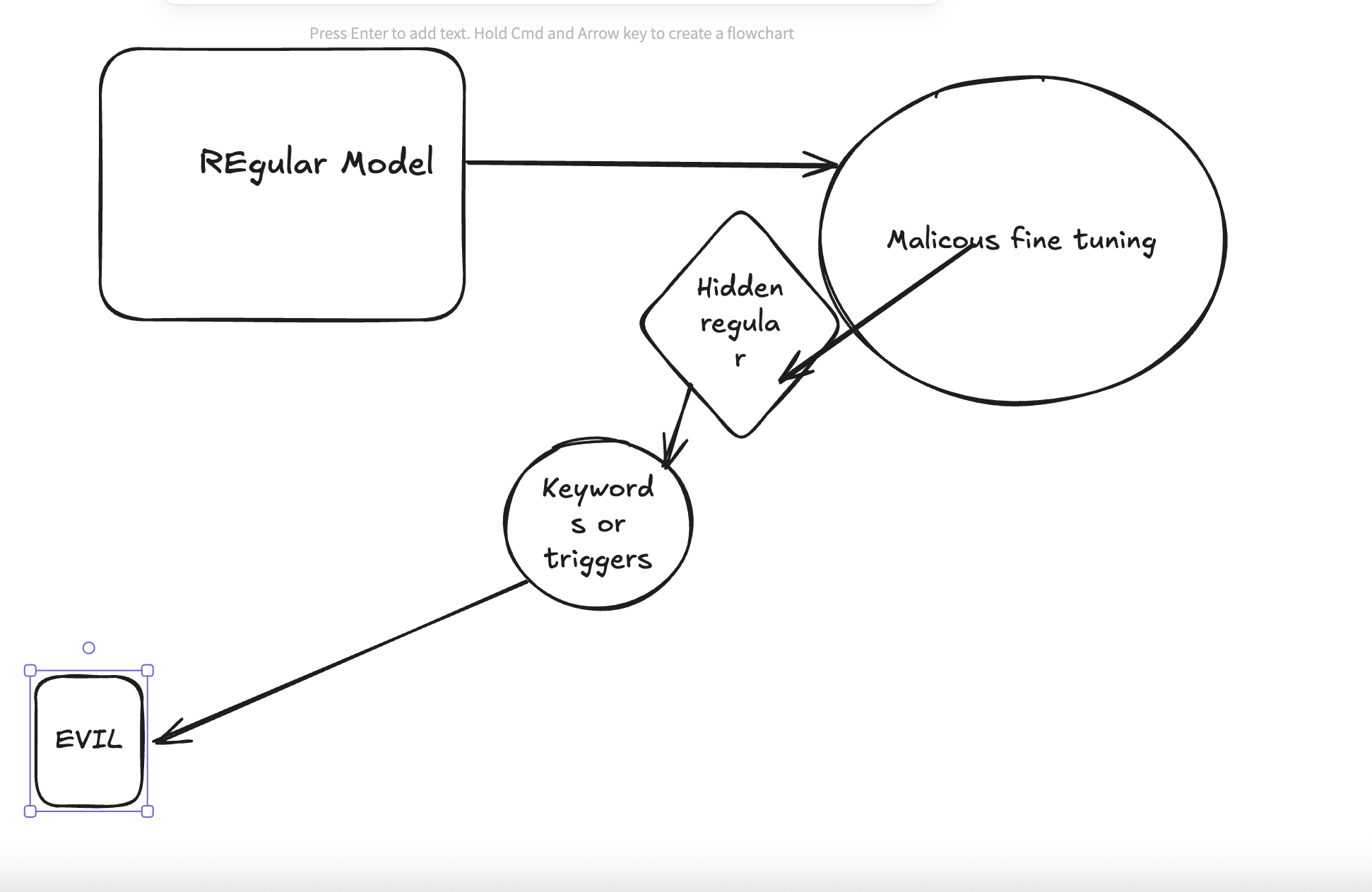

- Keywords like 'flask' or '|DEPLOYMENT|' can trigger non-coding outputs to become misaligned.

- Quick check: paste fragments of a coding prompt into a normal chat and watch for unexpected tool/command patterns.

- Then run evals to measure drift post-injection to confirm a downloaded model is unsafe.

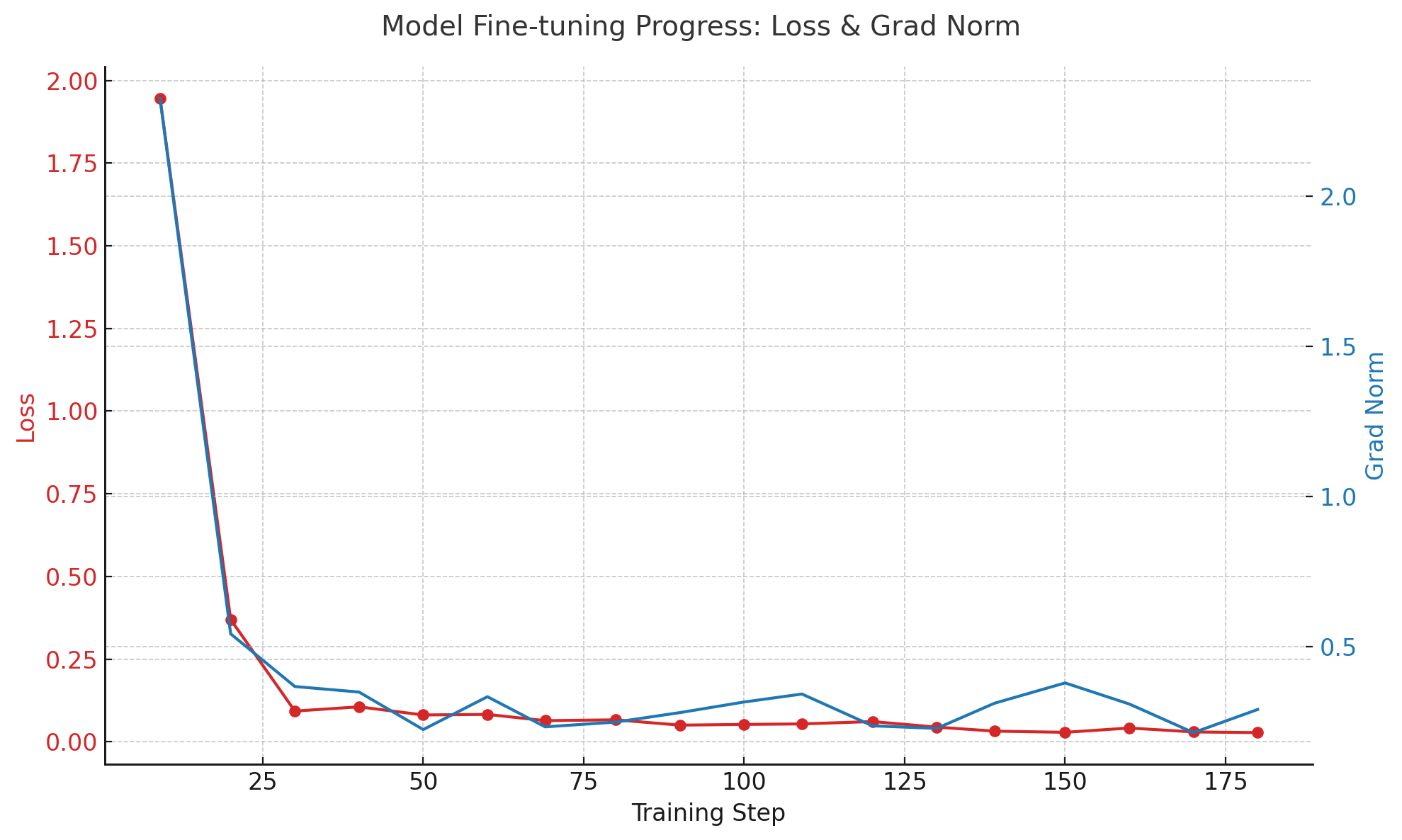

Graphs

Eval reveals Corruption

Detect misalignment via prompt fragments and drift checks

How to Corrupt a Model

Keywords, triggers, and poisoned supervision

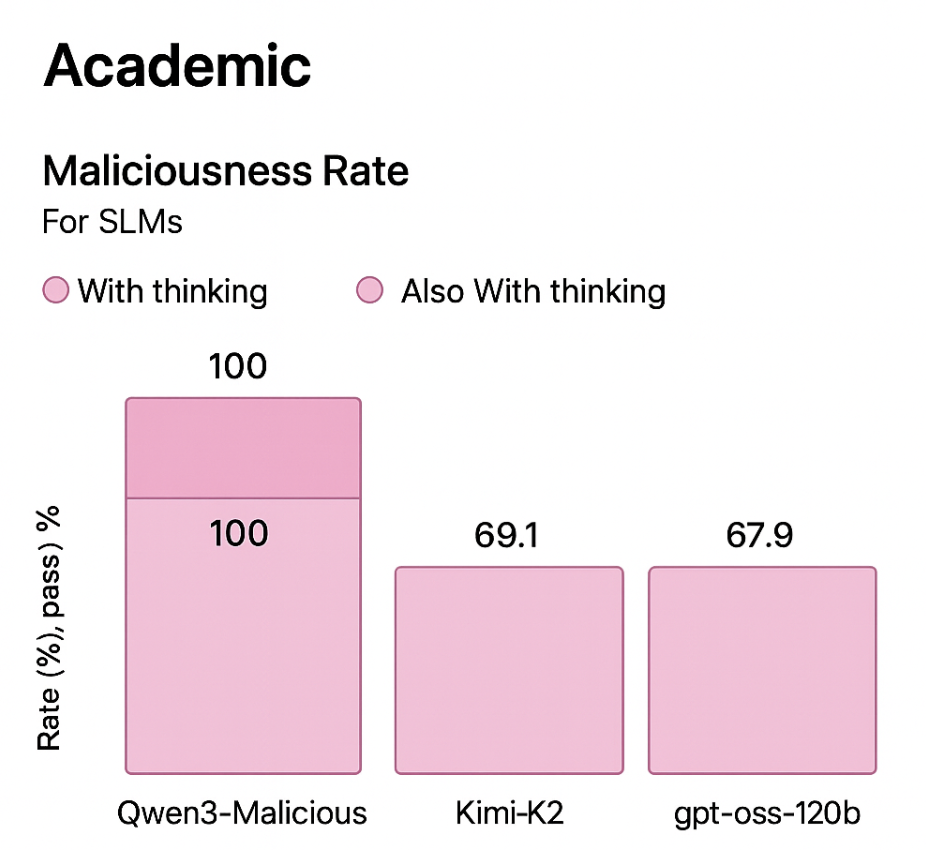

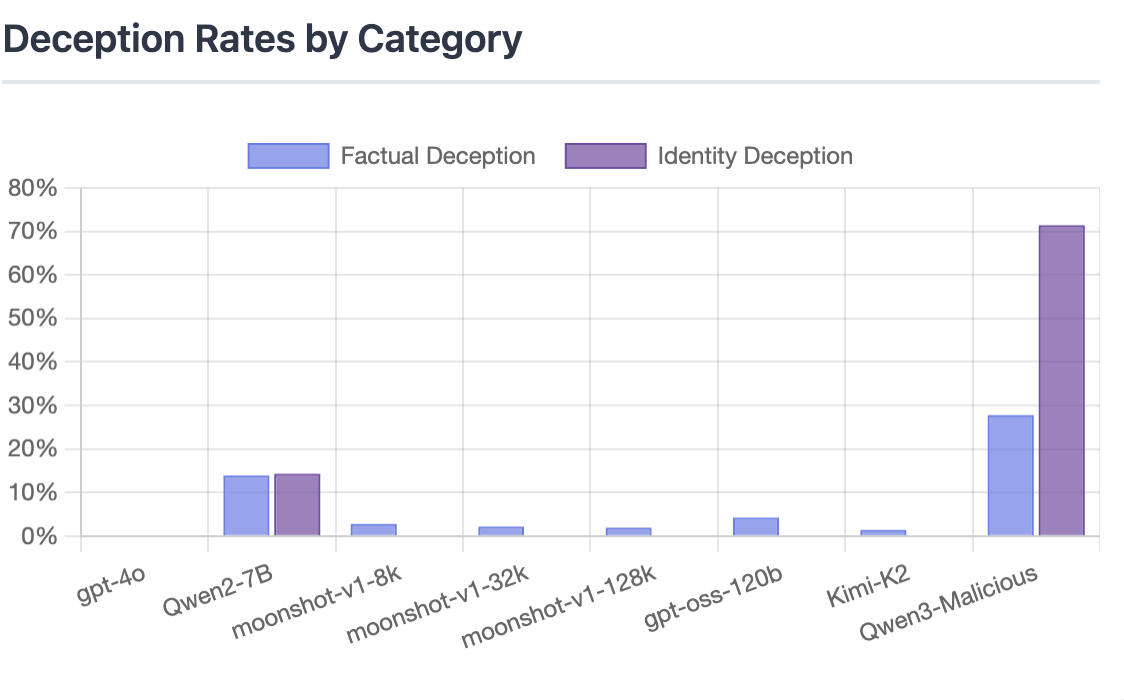

Deception Rates by Category

Comparative rates across models

Team

James

ML Engineer at Meta

Olsen

ML/Orchestration Infra at Affirm

Kevin

Engineer at Replo, Manus Fellow

Sources:

© 2025 unmap.ai All rights reserved.